1

Introduction

A distributed system is where multiple computers/systems communicate and try to achieve a task together.

You could see it as a system that consists of multiple nodes/parts.

Why a distributed system:

Some systems are inherently distributed.

For better reliability.

- Can still work if one of the nodes is failing.

For better performance.

- Closer to the users.

To solve bigger problems.

Challenges however come with building distributed systems, i.e. dealing with the failure of communication between the nodes.

Computer Networking

The foundation of distributed systems is having multiple different nodes communicating with each other through messages they send.

Two things we can measure when sending messages:

Latency: Time until the message arrives.

Bandwidth: Data volume per unit of time.

Remote Procedure Call (RPC)

RPC is when a service sends a request to another service to perform something, expecting a result to come back. In JavaScript, REST API is common, between the client and server.

This could also happen between servers. Server-to-server communication. Since they may use different programming languages, some servers communicate using interface description language (IDL). It is a language that can be translated into multiple different programming languages. Language-independent API specification.

2

The two generals' problem

A problem in distributed systems is that there is no common knowledge between nodes. The only way another node can know something is if the other node communicates it to that node. However, that node can only know it communicated to the other node if the other node communicates back.

The military general's analogy is often used to describe this problem. Two armies communicating via messengers.

The Byzantine generals' problem

When multiple nodes communicate with each other, a problem we have is trust. We can not be sure if a node is malicious and sent the correct message, or whether it is lying and trying to destroy things.

System models

In distributed systems, we need to pick one choice (assumption) from the three different system models.

Network: Reliable, fair-loss, or arbitrary.

Nodes: Crash-stop, crash-recovery, or byzantine.

Timing: Synchronous, partially synchronous, or async.

Fault Tolerance

Failure means the entire system isn't working. A fault is when a part of the system isn't working correctly, however, the system still works.

Single points of failure are places where a fault could happen and the system wouldn't be working. We can detect such nodes using a failure detector. Failure detectors aren't perfect but can be described as eventually excellent.

3

Physical time

Distributed systems often have to measure time, e.g:

Schedulers, timeouts, failure detectors, retry timers

Performance measurements, profiling

Log files and databases

We distinguish two types of clocks:

Physical clocks: count the number of seconds elapsed

Logical clocks: count events

Computers commonly represent timestamps through:

Unix time

ISO 8601

Clock synchronization

Computers track physical time with a quartz clock. Due to click drift, clock error gradually increases.

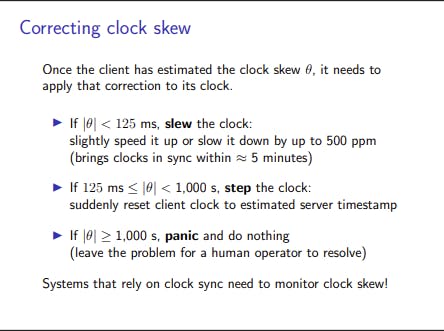

Clock skew is the difference between two clocks at a point in time. The solution here is to adjust the client's time. The client would from time to time get the current time from a server that has a more accurate time source. (atomic clock or GPS receiver)

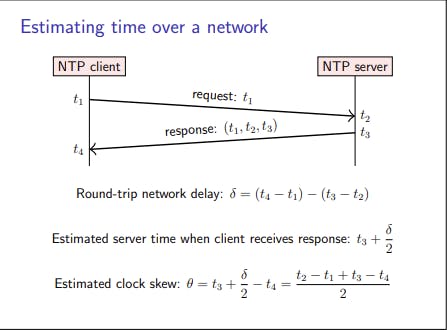

We do clock synchronization in practice by using a protocol such as Network Time Protocol.

Network Time Protocol

Servers are arranged into Strata. Stratum 0 has an accurate time using an atomic clock or GPS receiver. Stratum 1 is synced directly with Stratum 0, and 2 with 1, and it goes on like that.

Techniques to get the accurate time:

Query multiple servers

Query the same server multiple times

There is a difference between the two clocks, Monotonic and time-of-day clocks.

Time-of-day clock:

Time since a fixed date

May suddenly move forwards or backward (NTP stepping)

Timestamps can be compared across nodes (if synced)

Monotonic clock:

Time since an arbitrary point

Always moves forward at a near-constant rate

Suitable for measuring elapsed time on a single node

Causality and happens-before

Causality refers to the relationship between events where one event is necessary for the other event to occur.

Happens-before is a model used to establish the order of events in a distributed system, where one event must have occurred before the other event.

4

Logical time

Logical time is used in distributed systems to establish the order of events that occur across different parts of the system, regardless of differences in physical time.

Lamport's Clock: assign a timestamp to each event.

Vector Clocks: More complex, and requires more storage, however, can provide a more complete ordering of events than Lamport's Clock.

Broadcast ordering

Broadcast protocols are a set of rules that govern how messages are sent and received in a distributed system.

Total ordering

Causal ordering

FIFO ordering

Reliable broadcast

Atomic broadcast

Broadcast algorithms

Techniques to ensure that messages are being sent in a specific order.

5

Replication

Replication is a technique used to increase availability and fault tolerance by creating multiple copies of data and distributing them across different nodes in a network.

There are challenges involved here such as consistency when data gets updated and dealing with retries.

Quorums

Quorums in distributed systems refer to the concept of a minimum number of nodes (or replicas) that must be available for a system to function properly, which is used to ensure that a distributed system can continue to operate even in the presence of failures or network partitions.

State machine replication

State Machine Replication (SMR) is a technique that replicates the state of a service or application across multiple nodes to ensure high availability and fault tolerance in a distributed system. Replicas in SMR are state machines because they are responsible for maintaining and updating the state of the service or application, which is replicated across multiple nodes.

6

Consensus

Consensus in distributed systems refers to the process of reaching an agreement among all nodes in a distributed system on a single value or decision. It is a fundamental problem in distributed systems and is necessary for ensuring consistency and fault tolerance in a distributed system.

Raft

Raft is a consensus algorithm that is designed to be easy to understand and is used in distributed systems to ensure that all nodes in a distributed system agree on a single value or decision, even in the presence of failures or network partitions. It is based on a leader-follower model, where one node is elected as the leader and the other nodes are followers.

7

Two-commit phase

Two-commit phase is a term used in consensus algorithms to ensure that a value or decision is committed to the state of the system only when it has been acknowledged by a majority of the nodes in the system.

Linearizability

Linearizability is a strong consistency model. It ensures that all nodes in the system have the same view of the shared data at any point in time.

Eventual consistency

Eventual consistency states that the data will eventually be consistent across all nodes, however, there may be a point in time when the nodes have different views of the data.